Trajectory and Sway Prediction for Fall Prevention

Challenge

- Conducted research with the Stanford Assistive Robotics and Manipulation Laboratory (ARMLab) to develop a wearable sensor that predicts a person’s path and stability and alerts them to possible falls in real time.

- Intended users are older individuals who are at higher risk of instability and falls.

- The prior data collection setup involved multiple pieces of hardware. I aimed to simplify it by reducing the number of parts while maintaining performance.

Action

- Developed an iOS application in the Swift programming language that uses the iPhone’s LiDAR sensor to capture depth data of the surrounding environment and generate a depth panorama.

- The depth panorama serves as input to a trajectory and sway prediction model that combines a variational autoencoder (VAE) with a long short-term memory (LSTM) network.
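The write-up does not specify how the depth panorama is assembled. As a hypothetical illustration only (not the app’s Swift code), the Python sketch below shows one common way to stitch depth frames into a 360° panorama. It assumes each LiDAR depth frame arrives with a device heading (yaw) from motion tracking, that frame columns evenly span a known horizontal field of view (`hfov_deg`, a placeholder value), and that each azimuth bin keeps the nearest valid depth. The function `build_depth_panorama` and its parameters are hypothetical names.

```python
import math
from typing import List, Sequence

def build_depth_panorama(
    frames: Sequence[Sequence[Sequence[float]]],
    yaws_deg: Sequence[float],
    hfov_deg: float = 60.0,   # placeholder FOV; the real value depends on the device
    num_bins: int = 360,
) -> List[List[float]]:
    """Stitch depth frames into a 360-degree depth panorama (hypothetical sketch).

    frames[k][r][c] is depth in meters for frame k, row r, column c; all frames
    are assumed to share the same number of rows. yaws_deg[k] is the device
    heading when frame k was captured. Returns pano[r][b], the nearest valid
    depth seen in azimuth bin b, or inf if that bin was never observed.
    """
    if len(frames) != len(yaws_deg):
        raise ValueError("need exactly one yaw per frame")
    if not frames:
        return []
    rows = len(frames[0])
    bin_width = 360.0 / num_bins
    pano = [[math.inf] * num_bins for _ in range(rows)]
    for frame, yaw in zip(frames, yaws_deg):
        cols = len(frame[0])
        for c in range(cols):
            # Azimuth of this column's center, assuming columns evenly span the FOV.
            az = yaw - hfov_deg / 2 + (c + 0.5) * hfov_deg / cols
            # Wrap into [0, 360) and map to a bin; the final modulo guards rounding.
            b = int((az % 360.0) // bin_width) % num_bins
            for r in range(rows):
                d = frame[r][c]
                # Skip invalid returns (zero, negative, NaN, or infinite depth).
                if math.isfinite(d) and d > 0:
                    pano[r][b] = min(pano[r][b], d)
    return pano
```

For example, two single-row frames captured at headings of 45° and 135° with `hfov_deg=90.0` and `num_bins=4` fill bins 0 and 1 with their nearest valid depths and leave bins 2 and 3 at infinity. Keeping the minimum depth per bin favors nearby obstacles, which seems a sensible default for fall prevention, though an average or most-recent value would also be reasonable choices.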

Result

- The application demo (below) shows the depth panorama being generated in real time as a person walks down a hallway. The frame rate (FPS) shown on screen must be multiplied by the number of processor cores to obtain the overall rate (the iPhone 12 Pro used for testing has a 6-core CPU).

- The PyTorch prediction model has been converted to Core ML and will eventually be deployed on the iPhone so that data collection and prediction run together on the device.

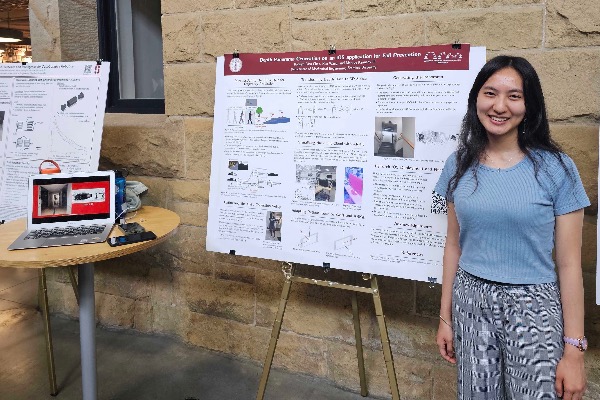

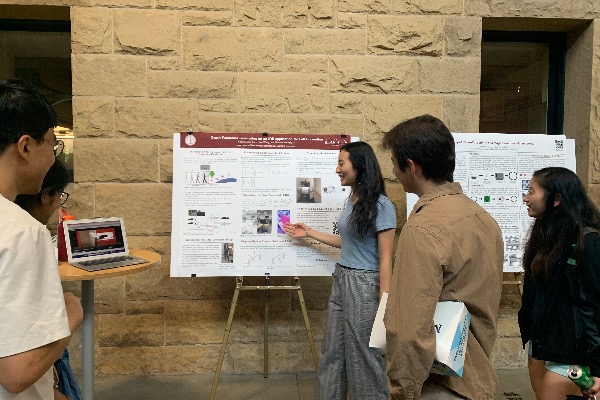

Research Presentations

- SURI Poster Session, August 2023: Presented summer research progress to Stanford mechanical engineering labs and the SURI cohort.

- Innovation and Discovery Expo, October 2023: Presented to bioscience, engineering, and medical researchers at the ARMLab demonstration booth. The expo was hosted by Stanford Bio-X and the Wu Tsai Human Performance Alliance.